It was a conceptually dense week in class. The first part of the week I spent talking about topics such as ecological complexity, vulnerability, adaptation, and resilience. One of the key take-home messages of this material is that uncertainty is ubiquitous in complex ecological systems. Now, while systemic uncertainty does not mean that the world is unpatterned or erratic, it does mean that people are never sure what their foraging returns will be or whether they will come down with the flu next week or whether their neighbor will support them or turn against them in a local political fight. Because uncertainty is so ubiquitous, I see it as especially important for understanding human evolution and the capacity for adaptation. In fact, I think it's so important a topic that I'm writing a book about it. More on that later...

First, it's important to distinguish two related concepts. Uncertainty simply means that you don't know the outcome of a process with 100% certainty: outcomes are probabilistic. Risk, on the other hand, combines the likelihood of a negative outcome with that outcome's severity. A mildly negative outcome that is very likely to occur might well seem less risky than a more severe outcome that occurs with lower probability. When a forager leaves camp for a hunt, he does not know what return he will get. Will it be 10,000 kcal? 5,000 kcal? 0 kcal? This is uncertainty. If the hunter's children are starving and might die if he doesn't return with food, then returning with 0 kcal is risky as well.

Human behavioral ecology has a number of elements that distinguish it as an approach to studying human ecology and decision-making. These features have been discussed extensively by Bruce Winterhalder and Eric Smith (1992, 2000), among others. They include: (1) the logic of natural selection, (2) a hypothetico-deductive framework, (3) a piecemeal approach to understanding human behavior, (4) a focus on simple (strategic) models, (5) an emphasis on behavioral strategies, and (6) methodological individualism. Others that I would add include: (7) ethological (i.e., naturalistic) data collection, (8) rich ethnographic context, and (9) a focus on adaptation and behavioral flexibility, in contrast to typology and progressivism. The hypothetico-deductive framework and the use of simple models (along with the logic of selection) jointly account for the frequent use of optimality models in behavioral ecology. Not to overdo it with the laundry lists, but optimality models also share some common features. These include: (1) the definition of an actor, (2) a currency and an objective function (i.e., the thing that is maximized), (3) a strategy set or set of alternative actions, and (4) a set of constraints.

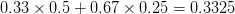

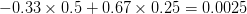

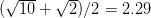
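To make the four shared components concrete, here is a minimal Python sketch of a generic optimality model. The class name `OptimalityModel`, the three foraging options, their rates and travel times, and the six-hour daylight constraint are all hypothetical illustrations, not data from any study.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class OptimalityModel:
    """Hypothetical container for the four shared features of an optimality model."""
    actor: str                                    # (1) who is deciding
    objective: Callable[[str], float]             # (2) currency/objective function to maximize
    strategies: Sequence[str]                     # (3) strategy set (alternative actions)
    constraints: Sequence[Callable[[str], bool]]  # (4) each returns True if a strategy is feasible

    def best_strategy(self) -> str:
        """Return the feasible strategy with the highest objective value."""
        feasible = [s for s in self.strategies if all(ok(s) for ok in self.constraints)]
        if not feasible:
            raise ValueError("no strategy satisfies the constraints")
        return max(feasible, key=self.objective)


# Made-up numbers: energy gain rate (kcal/hr) and one-way travel time (hours).
rate = {"berries": 300.0, "tubers": 450.0, "honey": 900.0}
travel = {"berries": 0.5, "tubers": 1.0, "honey": 4.0}

model = OptimalityModel(
    actor="forager",
    objective=lambda s: rate[s],                    # currency: rate of energy gain
    strategies=list(rate),
    constraints=[lambda s: 2 * travel[s] <= 6.0],   # round trip must fit in 6 daylight hours
)
print(model.best_strategy())
```

Honey has the highest rate but is ruled out by the constraint, so the sketch picks tubers. Making the constraints explicit is what lets a change in them change the predicted behavior.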

For concreteness' sake, I will focus on foraging in this discussion, though the points apply to other types of problems. When behavioral ecologists attempt to understand foraging decisions, the currency they overwhelmingly favor is the rate of energy gain. There are plenty of good reasons for this; check out Stephens and Krebs (1986) if you are interested. The point that I want to make here is that, ultimately, it's not the energy itself that matters for fitness. Rather, it is what you do with it. How does a successful foraging bout increase your marginal survival probability or fertility rate? This doesn't sound like such a big issue, but it has important implications. In particular, fitness (or utility) is a function of energy return. This means that in a variable environment, it matters how we average. Different averages can give different answers. For example, what is the average of the square root of 10 and 2? There are two ways to do this: (1) average the two values and take the square root (i.e., take the function of the mean), and (2) take the square roots and average (i.e., take the mean of the function). The first of these is $\sqrt{(10+2)/2} = \sqrt{6} \approx 2.45$. The second is $(\sqrt{10} + \sqrt{2})/2 \approx 2.29$. The function of the mean is greater than the mean of the function. This is a result of Jensen's inequality. The square root function is concave: it has a negative second derivative. This means that while $\sqrt{x}$ gets bigger as $x$ gets bigger (its first derivative is positive), the increase is incrementally smaller as $x$ gets larger. This is commonly known as diminishing marginal utility.
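The square-root example can be checked in a few lines of Python. This sketch simply evaluates both orders of averaging for the two values in the text.

```python
import math
from statistics import mean

values = [10, 2]

function_of_mean = math.sqrt(mean(values))             # sqrt(6)
mean_of_function = mean(math.sqrt(v) for v in values)  # (sqrt(10) + sqrt(2)) / 2

print(f"function of the mean: {function_of_mean:.3f}")
print(f"mean of the function: {mean_of_function:.3f}")
```

For any concave function the first number is at least as large as the second (Jensen's inequality). Swapping in a convex function such as `x ** 2` reverses the ordering.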

Lots of things naturally show diminishing marginal gains. Imagine foraging for berries in a blueberry bush when you're really hungry. When you arrive at the bush (i.e., 'the patch'), your rate of energy gain is very high. You're gobbling berries about as fast as you can move your hands from the bush to your mouth. But after you've been there a while, your rate of consumption starts to slow down. You're depleting the bush. It takes longer to pick the berries because you have to reach into the interior of the bush or go around the other side or get down on the ground to get the low-hanging berries.

Chances are, there's going to come a point where you don't think it's worth the effort any more. Maybe it's time to find another bush; maybe you've got other important things to do that are incompatible with berry-picking. In his classic paper, Ric Charnov (1976) derived the conditions under which a rate-maximizing berry-picker should move on, the so-called 'marginal value theorem' (abandon the patch when the marginal rate of energy gain equals the mean rate for the environment). There are a number of similar marginal value solutions in ecology and evolutionary biology (they all arise from maximizing some rate or another). Two other examples: Parker derived a marginal value solution for the optimal time that a male dung fly should copulate (you can't make this stuff up), and van Baalen and Sabelis (1995) derived the optimal virulence for a pathogen when the conditional probability of transmission and the contact rate between infectious and susceptible hosts trade off.
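Charnov's rule can be illustrated numerically. The sketch below assumes a hypothetical exponential gain curve $g(t) = G(1 - e^{-rt})$ for a single patch (one common diminishing-returns shape, not the only one) and a fixed travel time $\tau$ between identical patches. It finds the residence time at which the marginal gain rate $g'(t)$ equals the long-run average rate $g(t)/(\tau + t)$. All parameter values are made up.

```python
import math


def gain(t: float, G: float = 100.0, r: float = 0.5) -> float:
    """Hypothetical cumulative energy gained after t time units in a depleting patch."""
    return G * (1.0 - math.exp(-r * t))


def marginal_gain(t: float, G: float = 100.0, r: float = 0.5) -> float:
    """Derivative of gain(): the instantaneous rate of energy gain in the patch."""
    return G * r * math.exp(-r * t)


def optimal_residence_time(travel: float, G: float = 100.0, r: float = 0.5) -> float:
    """Bisection for the time t at which marginal gain equals the long-run average rate."""
    def excess(t: float) -> float:
        # Positive while staying beats the environmental average; negative afterwards.
        return marginal_gain(t, G, r) - gain(t, G, r) / (travel + t)

    lo, hi = 0.0, 1.0
    while excess(hi) > 0:  # widen the bracket until the sign changes
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for travel in (1.0, 5.0):
    t_star = optimal_residence_time(travel)
    rate = gain(t_star) / (travel + t_star)
    print(f"travel time {travel}: leave after {t_star:.3f} (long-run rate {rate:.3f})")
```

Longer travel times lower the environmental average rate, so the rule tells the forager to stay longer in each patch, which is the classic qualitative prediction of the marginal value theorem.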

So, what does all this have to do with risk? In a word, everything.

Consider a utility curve with diminishing marginal returns. Suppose you are at the mean, indicated by $\bar{x}$. Now you take a gamble. If you're successful, you move up to $\bar{x} + \delta$ and its associated utility. However, if you fail, you move down to $\bar{x} - \delta$ and its associated utility. These two outcomes are equidistant from the mean. Because the curve is concave, the gain in utility that you get moving from $\bar{x}$ to $\bar{x} + \delta$ is much smaller than the loss you incur moving from $\bar{x}$ to $\bar{x} - \delta$. The downside risk is much bigger than the upside gain. This is illustrated in the following figure:

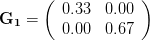

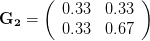

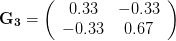
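The asymmetry in the figure can be reproduced with any concave utility function. This sketch assumes a square-root utility (a stand-in, not a function specified in the text), a mean return of 100, and a symmetric gamble of ±50.

```python
import math


def utility(x: float) -> float:
    """Hypothetical concave utility: square root of the return."""
    return math.sqrt(x)


x_bar, delta = 100.0, 50.0  # illustrative mean return and gamble size

upside = utility(x_bar + delta) - utility(x_bar)    # utility gained on success
downside = utility(x_bar) - utility(x_bar - delta)  # utility lost on failure

# A 50/50 gamble has the same mean return as staying put, but lower expected utility.
expected_gamble = 0.5 * utility(x_bar + delta) + 0.5 * utility(x_bar - delta)

print(f"upside gain:   {upside:.3f}")
print(f"downside loss: {downside:.3f}")
print(f"sure mean {utility(x_bar):.3f} vs. gamble {expected_gamble:.3f}")
```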

When returns are variable and utility/fitness is a function of returns, we can use expected utility as a tool for understanding optimal decisions. The idea goes back to von Neumann and Morgenstern, the fathers of game theory. Expected utility has received some attention in behavioral ecology, though not as much as it deserves. Stephens and Krebs (1986) discuss it in their definitive book on foraging theory. Bruce Winterhalder, Flora Lu, and Bram Tucker (1999) have discussed expected utility in analyzing human foraging decisions, and Bruce has also written with Paul Leslie (Winterhalder & Leslie 2002; Leslie & Winterhalder 2002) on the topic with regard to fertility decisions. Expected utility encapsulates the very sensible idea that, when faced with a choice between two options that have uncertain outcomes, you should choose the one with the higher average payoff. The basic idea is that the world presents variable payoffs. Each payoff has a utility associated with it. The best decision is the one with the highest overall expected, or average, utility.

Consider a forager deciding what type of hunt to undertake. He can go for big game, but there is only a 10% chance of success. When he succeeds, he gets 10,000 kcal of energy. When he fails, he can almost always find something else on the way back home to bring to camp: 90% of the time, he will bring back 1,000 kcal. The other option is to go for small game, which is generally a much more certain endeavor. Ninety percent of the time, he will net 2,000 kcal. Such small game is remarkably uniform in its payoff, but sometimes (10%) the forager will get lucky and receive 3,000 kcal. We calculate the expected utility by summing the products of the probabilities and the rewards, assuming for simplicity in this case that the utility is simply the energy value (if we didn't make this assumption, we would calculate the utilities associated with the returns first before averaging).

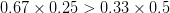

Big Game: 0.1 × 10,000 + 0.9 × 1,000 = 1,900

Small Game: 0.9 × 2,000 + 0.1 × 3,000 = 2,100

Small game is preferred because it has higher expected utility.
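The calculation above, and the alternative the text mentions of converting returns into utilities before averaging, can be written as a small Python function. The identity utility reproduces the numbers above; the square-root utility is a hypothetical concave alternative, included only to show where a utility function would enter.

```python
import math
from typing import Callable, Sequence, Tuple

Lottery = Sequence[Tuple[float, float]]  # (probability, return in kcal) pairs


def expected_utility(lottery: Lottery, u: Callable[[float], float] = lambda x: x) -> float:
    """Sum of probability * utility(return); defaults to utility = energy value."""
    if not math.isclose(sum(p for p, _ in lottery), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * u(x) for p, x in lottery)


big_game = [(0.1, 10_000.0), (0.9, 1_000.0)]
small_game = [(0.9, 2_000.0), (0.1, 3_000.0)]

for name, u in [("utility = energy", lambda x: x), ("utility = sqrt(energy)", math.sqrt)]:
    eu_big, eu_small = expected_utility(big_game, u), expected_utility(small_game, u)
    print(f"{name}: big game {eu_big:.2f}, small game {eu_small:.2f}")
```

Under the square-root utility, small game (about 45.7) still beats big game (about 38.5); a differently shaped utility curve could change the ranking.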

We can do a bit of analysis on our utility curve and show something very important about risk and expected utility. I'll spare the mathematical details, but we can expand our utility function around the mean return using a Taylor series and then calculate expectations (i.e., averages) on both sides. The resulting expression encapsulates a lot of the theory of risk management. Let $w(x)$ indicate the utility associated with return $x$ (where I follow the population genetics convention that fitness is denoted by $w$), and let $\bar{x}$ be the mean return. To second order,

$$E[w(x)] \approx w(\bar{x}) + \frac{1}{2} w''(\bar{x}) \, \mathrm{Var}(x).$$

Mean fitness is approximately equal to the fitness of the mean payoff plus a term that includes the variance in $x$ and the second derivative of the utility function. When there is diminishing marginal utility, $w''(\bar{x}) < 0$, so this term will be negative. Therefore, variance will reduce mean fitness below the fitness of the mean. When there is diminishing marginal utility, variance is bad. How bad is determined both by the magnitude of the variance and by how curved the utility curve is. If there is no curve, utility is a straight line and $w''(x) = 0$. In that case, variance doesn't matter.
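As a sanity check on the second-order approximation, the sketch below compares the exact mean fitness $E[w(x)]$ with $w(\bar{x}) + \tfrac{1}{2} w''(\bar{x})\,\mathrm{Var}(x)$ for the small-game returns from the hunting example. The square-root fitness function is an assumption made for illustration; its second derivative is $-\tfrac{1}{4}x^{-3/2}$.

```python
import math

# Small-game returns from the example: (probability, kcal)
lottery = [(0.9, 2000.0), (0.1, 3000.0)]

mean_x = sum(p * x for p, x in lottery)
var_x = sum(p * (x - mean_x) ** 2 for p, x in lottery)


def w(x: float) -> float:
    """Hypothetical concave fitness function: w(x) = sqrt(x)."""
    return math.sqrt(x)


def w_second(x: float) -> float:
    """Second derivative of sqrt(x): -(1/4) * x**(-3/2)."""
    return -0.25 * x ** -1.5


exact = sum(p * w(x) for p, x in lottery)             # E[w(x)]
approx = w(mean_x) + 0.5 * w_second(mean_x) * var_x  # second-order Taylor approximation

print(f"w(mean) = {w(mean_x):.4f}, exact E[w] = {exact:.4f}, approximation = {approx:.4f}")
```

Both the exact value and the approximation fall below $w(\bar{x})$, and here they agree to within about 0.02. With a linear $w$ the correction term vanishes and the two sides match exactly.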

So, when utility is concave, variance is bad for fitness. And variance can get big. One can imagine it being quite sensible to sacrifice some mean return in exchange for a reduction in variance, if the benefit of that reduction outweighed the premium paid out of the mean. This is exactly what we do when we purchase insurance or when a farmer sells grain futures. It is also something that animals with parental care do. Rather than spewing out millions of gametes in the hope that a few will get lucky (as a sea urchin does), animals with parental care take the energy they could have spent on many more gametes and reinvest it in ensuring the survival of their offspring. This is probably also why hunter-gatherer women target reliable resources that generally have a lower mean return than other available, but risky, items.
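One way to quantify how much mean return is worth sacrificing is the certainty equivalent: the sure return that gives the same utility as the gamble. The gap between the gamble's mean and its certainty equivalent is the risk premium. This sketch assumes a square-root utility (purely illustrative) and reuses the big-game lottery from the hunting example.

```python
import math

big_game = [(0.1, 10_000.0), (0.9, 1_000.0)]  # (probability, kcal)


def u(x: float) -> float:
    """Hypothetical concave utility."""
    return math.sqrt(x)


def u_inverse(v: float) -> float:
    """Inverse of u: the return that yields utility v."""
    return v ** 2


mean_return = sum(p * x for p, x in big_game)
expected_u = sum(p * u(x) for p, x in big_game)
certainty_equivalent = u_inverse(expected_u)
risk_premium = mean_return - certainty_equivalent

print(f"mean {mean_return:.0f} kcal, certainty equivalent {certainty_equivalent:.0f} kcal, "
      f"premium {risk_premium:.0f} kcal")
```

Under this utility, the forager would prefer any guaranteed return above about 1,479 kcal to the big-game gamble, even though the gamble averages 1,900 kcal. Giving up roughly 420 kcal of mean return for certainty is the same logic as buying insurance.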

It turns out that humans have all sorts of ways of dealing with risk, some of them embodied in our very biology. I'm going to come up short in enumerating these because this is the central argument of my book manuscript and I don't want to give it away (yet)! I hope to blog here in the near future about three papers that I have nearly completed that deal with risk management and the evolution of social systems, reproductive decision-making in an historical population, and foraging decisions by contemporary hunter-gatherers. When they come out, my blog will be the first to know!

References

Charnov, E. L. 1976. Optimal foraging: The marginal value theorem. Theoretical Population Biology. 9:129-136.

Leslie, P., and B. Winterhalder. 2002. Demographic consequences of unpredictability in fertility outcomes. American Journal of Human Biology. 14 (2):168-183.

Parker, G. A., and R. A. Stuart. 1976. Animal behavior as a strategy optimizer: evolution of resource assessment strategies and optimal emigration thresholds. American Naturalist. 110:1055-1076.

Stephens, D. W., and J. R. Krebs. 1986. Foraging theory. Princeton: Princeton University Press.

van Baalen, M., and M. W. Sabelis. 1995. The dynamics of multiple infection and the evolution of virulence. American Naturalist. 146 (6):881-910.

Winterhalder, B., and P. Leslie. 2002. Risk-sensitive fertility: The variance compensation hypothesis. Evolution and Human Behavior. 23:59-82.

Winterhalder, B., F. Lu, and B. Tucker. 1999. Risk-sensitive adaptive tactics: Models and evidence from subsistence studies in biology and anthropology. Journal of Archaeological Research. 7 (4):301-348.

Winterhalder, B., and E. A. Smith. 2000. Analyzing adaptive strategies: Human behavioral ecology at twenty-five. Evolutionary Anthropology. 9 (2):51-72.